By Jonathan Graff, MBA, ICD.

As a wave of cost-cutting sweeps through Silicon Valley, executives have whittled down teams assigned to combating problematic content. But such belt-tightening could have important implications for online platforms. And security teams conducting any type of social media threat intelligence should take notice.

Following the 2016 U.S. presidential election, tech giants started cracking down on the deluge of toxic posts flooding their networks. This involved a massive investment in AI to limit the reach of fake news. Record profits also allowed these businesses to hire legions of moderators and policy experts to investigate content.

But a plunge in ad revenues has forced the industry to retrench. This process started with new privacy guidelines from Apple, which made it harder for marketers to track users on iOS devices. More recently, the short-video app TikTok has swiped millions of users from rivals, and higher interest rates have forced customers to dial back ad spending. All of this has left incumbent platforms to split a shrinking pie of online advertising spend.

To stem losses, social media companies have slashed budgets for content moderation. Admittedly, issues like extremism and child safety remain a priority. But layoffs mean fewer resources to address fake news, combat disinformation campaigns, or take down inappropriate posts. These problems have become particularly acute outside the United States, where international moderation teams have always struggled to get sufficient funding.

For security teams, this new era demands a fresh approach to social media threat intelligence. As reality sinks in, they’re scrambling to adjust to the new rules. Three of those rules deserve particular attention.

The Future of Social Media Threat Intelligence

The first rule is that brand impersonation will become a bigger concern for businesses. Recently, a wave of accounts impersonating influential companies triggered chaos online. Gaming giant Nintendo had to apologize after an impostor published an image of its famous Super Mario character giving the middle finger. A spoofed handle for PepsiCo posted, "Coke is better." An account mimicking Eli Lilly & Co announced that insulin was free – sending shares of the pharmaceutical giant plunging almost five percent. Pranksters also impersonated BP, Apple, and Lockheed Martin, along with various celebrities.

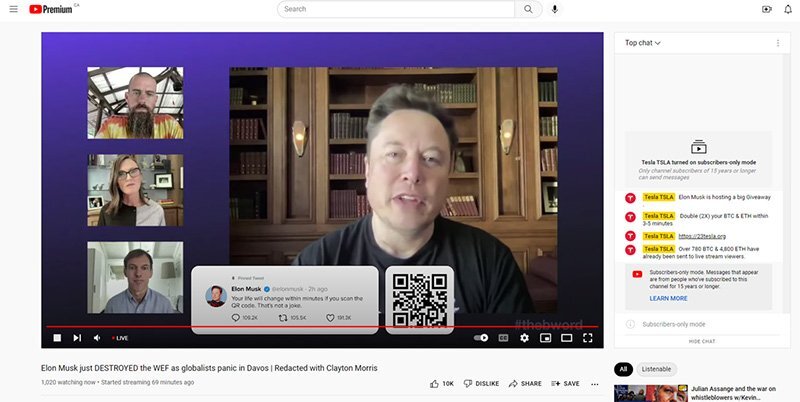

Not all of these incidents, however, are meant as jokes. Last year, the BBC reported a new scam where criminals would stream deepfake videos of tech billionaire Elon Musk promoting bogus cryptocurrency giveaways. Wallet transaction history shows the fraudsters stole over $128.0 million from victims.

A YouTube Livestream advertising a bogus cryptocurrency giveaway offer by impersonating Tesla CEO Elon Musk.

Such episodes can have serious consequences. Companies invest enormous resources to build a relationship with the public. But if bad actors have carte blanche to impersonate brands online, this reputation could be jeopardized. Eventually, customers, employees, or partners may avoid these organizations in favor of businesses that are seen as more trustworthy.

The second rule is that mis- and disinformation outside of the United States will become even more problematic. As mentioned, international moderation teams have long struggled to get sufficient funding to police content. And with the pressure to slash costs, these groups were first in line to see their budgets chopped.

Communities that speak different languages within the United States represent similar hotbeds for fake news and rumors. A recent analysis by Avaaz found that social networks failed to flag 70% of Spanish-language misinformation surrounding COVID-19. By comparison, moderators missed just 29% of such false claims in English. The researchers said such discrepancies result from platforms failing to provide adequate funding for fact-checking in languages other than English. And as a result, misleading or dangerous content in these communities can thrive.

Once again, this presents an unexpected risk for businesses. Most analysts conducting social media threat intelligence will naturally gravitate to content in their home countries or native tongue. But this bias makes it easy to overlook problematic content published in other languages or outside the United States. Issues such as counterfeiting, brand abuse, or employee impersonation could be occurring out in the open. But these threats are easy to miss if your team has not even considered the different communities that co-exist online. And thanks to recent cutbacks in content moderation, these forums will represent a bigger risk for security teams in 2023.

The third rule is that social networks will become more decentralized. Following the January 6th riot at the U.S. Capitol, millions of right-wing users fled established social media platforms for a collection of alt-tech networks – like Gab, Parler, Telegram, and Rumble – that promised less moderation. A similar phenomenon occurred in late 2022 following Elon Musk’s takeover of Twitter. Looser content moderation rules triggered an exodus from the microblogging service, as left-wing users fled the site to join rival Mastodon.

The changing online landscape creates new problems for security teams conducting social media threat monitoring. Previously, analysts only had to keep tabs on a handful of big platforms. But now, users have dispersed across dozens of sites. And if security leaders fail to watch these new communities, they could overlook threats hiding right in plain sight.

How Should Security Teams Respond?

First, analysts must cast a wider net for data collection. That means looking beyond the biggest social platforms and beyond threats posted in your native language. If your organization primarily conducts business in North America, start watching for brand abuse internationally. If you monitor the biggest platforms, keep tabs on the growing collection of alt-tech social networks. If you're searching for cases of mis- or disinformation in English, consider other languages as well.
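As a rough illustration of what widening the net to other languages can look like in practice, the Python sketch below checks posts against a small multilingual watchlist. The brand name, the risk terms, and the function names are all hypothetical, and the matching is plain substring search after accent folding. A production program would need proper translation review and language-aware tokenization.

```python
import unicodedata

# Hypothetical watchlist: a made-up brand paired with risk terms in several languages.
BRAND = "examplecorp"
RISK_TERMS = {
    "en": ["giveaway", "counterfeit"],
    "es": ["sorteo", "falsificación"],
    "fr": ["concours", "contrefaçon"],
}


def fold(text: str) -> str:
    """Casefold and strip accents so 'Falsificación' matches 'falsificacion'."""
    decomposed = unicodedata.normalize("NFKD", text.casefold())
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def match_post(text: str) -> list:
    """Return (language, term) pairs found in a post that mentions the brand."""
    folded = fold(text)
    if fold(BRAND) not in folded:
        return []  # the post does not mention the brand at all
    return [
        (lang, term)
        for lang, terms in RISK_TERMS.items()
        for term in terms
        if fold(term) in folded  # naive substring match, an assumption of this sketch
    ]
```

Calling `match_post("Gran SORTEO de ExampleCorp hoy")` would surface the Spanish term even though an analyst searching only English keywords would miss the post.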

Second, use OSINT tools to automate routine processes where possible. The sheer size of the web makes conducting effective social media threat intelligence through manual collection almost impossible. Users upload terabytes of content each minute. And even the most well-staffed organization cannot possibly sift through all of this information. But by exploiting technology to automate data collection and surveillance, analysts can spend more time investigating threats rather than trawling through posts.
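To make the idea of automating a routine check concrete, here is a minimal Python sketch that screens a list of account handles for lookalikes of an official brand handle. It is only an illustration: the handle `examplecorp`, the character-swap table, and the 0.85 threshold are assumptions chosen for the example, and the handles would come from whatever collection tool a team already uses.

```python
from difflib import SequenceMatcher

# Hypothetical values for illustration; not taken from any real monitoring feed.
OFFICIAL_HANDLES = {"examplecorp"}
# Common character swaps and separators seen in spoofed handles (an incomplete, assumed list).
LOOKALIKES = str.maketrans({"0": "o", "1": "l", "3": "e", "5": "s", "_": "", ".": "", "-": ""})


def normalize(handle: str) -> str:
    """Drop a leading @, casefold, and undo simple character swaps."""
    return handle.lstrip("@").casefold().translate(LOOKALIKES)


def impersonation_score(handle: str, official: str) -> float:
    """Return a 0..1 similarity ratio between a normalized handle and an official one."""
    return SequenceMatcher(None, normalize(handle), normalize(official)).ratio()


def flag_suspects(handles, threshold=0.85):
    """Return (handle, score) pairs that closely resemble, but are not, an official handle."""
    suspects = []
    for handle in handles:
        if handle.lstrip("@").casefold() in OFFICIAL_HANDLES:
            continue  # this is the genuine account
        best = max(impersonation_score(handle, o) for o in OFFICIAL_HANDLES)
        if best >= threshold:
            suspects.append((handle, round(best, 2)))
    return suspects
```

A check like this only narrows the list. An analyst would still review each flagged handle before treating it as impersonation.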

Third, keep a close eye on the shifting social media landscape. Security leaders have a lot on their plate. But unless they have teenagers, most probably don’t keep tabs on the latest apps making waves online. New communities, however, pop up all the time. And users migrate from one site to another. Failure to keep up with these changes can dramatically reduce the effectiveness of your web surveillance program.

The bottom line: moderation teams will have a lot fewer resources to police content in the months ahead. For bad actors, that will create more opportunities to spread misinformation, abuse brands, and harass victims. And analysts must come to grips with this new reality to keep their customers and organizations safe.